Flip The Tortoise

All Your Terawatts Are Belong To Us

Welcome to the internet’s AI hyper-consumption era.

TGIF!

If you enjoy this post or have an opinion about it, hit reply and let me know what you think.

Cheers,

-Growdy

When I started writing my automotive newsletter, I knew that in addition to covering the current state of the auto industry, the transition to EVs, and the shift of global automotive production dominance from the US and EU to China, I would also need to highlight changes in global energy consumption and production.

Because any time you mention the adoption of electric vehicles, some asshole in the room asks, “But what about the grid?”

What about the grid, indeed.

We need more electricity

Complicating the energy transition discussion, an already over-politicized and hotly debated topic, is the fact that AI uses an immense amount of energy.

When I think about all of the electricity needed to power our AI meme image generation, let college students cheat on their homework, and permit Elon Musk to do whatever his ketamine-soaked brain wants to do with Grok (like make pornographic deepfakes of Taylor Swift), even in these early AI days, I am reminded of a line from the movie Armageddon: it is like a vicious life-sucking bitch from which there is no escape.

AI’s actual energy needs are not easily calculated, and its CO2 emissions are equally elusive. This is AI’s dirty little secret.

WIRED magazine articulated the current AI energy predicament like this: “One unfortunate side effect of this proliferation (of AI) is that the computing processes required to run generative AI systems are much more resource-intensive. This has led to the arrival of the internet’s hyper-consumption era, a period defined by the spread of a new kind of computing that demands excessive amounts of electricity and water to build as well as operate.”

What does AI’s excessive electricity appetite look like?

For context, the world consumes roughly 30,000 terawatt-hours (TWh) of electricity each year.

And AI use is already widespread: nearly 30% of Americans, for example, are already using AI tools.

Some estimates suggest that AI could account for nearly half of all global data center power usage by the end of this year.

Data centers were already major electricity sponges. In 2024, global data center electricity consumption was estimated at around 415 TWh, or approximately 1.5% of total global electricity usage.

The International Energy Agency has forecast that, by the end of this decade, AI could require almost as much electricity as Japan uses today.

As AI’s electricity consumption grows, it will begin to rival the levels currently required by heavy industry and heating.

Where will all those incremental terawatt-hours come from?

All over the damn place.

Big tech companies’ needs for electricity are causing them to look under some interesting rocks.

This sorta explains (but does not justify) why Elon’s xAI obtained special permits to power its supercomputer facility in Memphis, Tennessee, with natural gas-burning turbines. Which, if you were wondering, isn’t great for the air quality in Memphis.

This is why Microsoft signed a deal to bring a reactor at Three Mile Island, the site of the worst nuclear accident in US history, back online.

This is why Google made a strategic investment in Energy Dome, a company that specializes in grid-level energy storage using CO2 battery technology.

Last year, OpenAI CEO Sam Altman posted on X that OpenAI believes “the world needs more AI infrastructure--fab capacity, energy, datacenters, etc--than people are currently planning to build.”

Yep, that sounds like hyper-consumption to me.

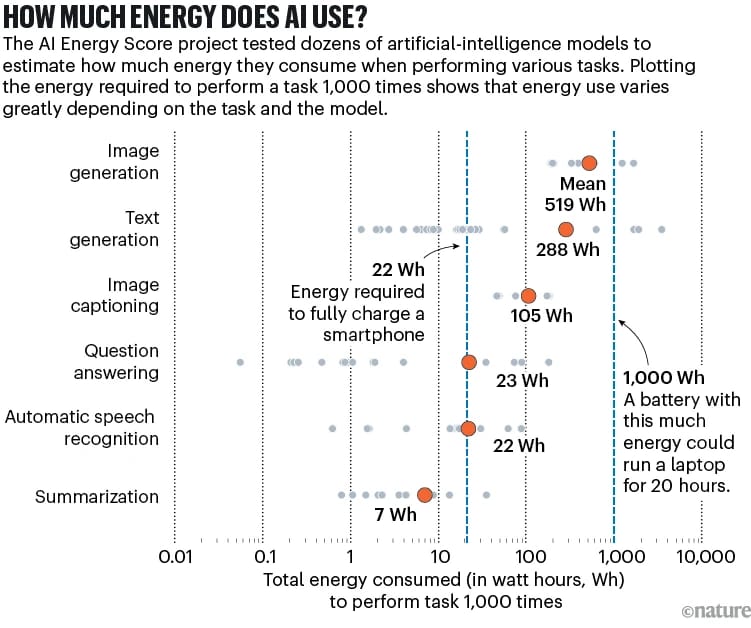

Source: Hugging Face AI Energy Score Leaderboard

Some remain concerned that our current methods for calculating these excessive energy needs aren’t accurate, and that AI’s energy requirements may be underestimated.

Yesterday, Google stepped up to that challenge and shared how much energy its AI chatbot tool, Gemini, uses to execute a single query.

Google suggested that “the median Gemini Apps text prompt uses 0.24 watt-hours (Wh) of energy, emits 0.03 grams of carbon dioxide equivalent (gCO2e), and consumes 0.26 millilitres (or about five drops) of water.”

That was lower than many folks expected.

But no matter how you cut it, we’re gonna need more electricity.

And before you get all dreadfully apocalyptical in your late-night wakefulness, worrying that the machines are going to turn us into Matrix-esque pink capsule batteries for their own needs, there is an ironic opportunity in AI-land.

The unexpected bright spot in this conversation is how AI might be able to help us solve the problem.

As the Financial Times explained, “Finding new materials, catalysts or processes that can produce stuff more efficiently is the sort of ‘needle in a haystack’ problem that AI is ideally suited to.”

Although we are already deep into the internet’s hyper-consumption era, AI research and the application of AI technology to the energy sector may help us find a way out of it.

“AI will enhance the ways humans experience the world.”

– Jeff Bezos, Founder of Amazon
